The future of 3D graphics

Following on from the demonstrations, Jen-Hsun Huang then invited Tony Tamasi, Vice President of Technical Marketing, up on stage to talk about the future of 3D graphics according to Nvidia.

Tamasi first talked about all of the techniques used in today's 3D graphics engines and described them as a collection of tools for developers to use – this conveniently brought him on to ray tracing. He reminded analysts that mental ray, a ray tracing engine used in many motion pictures, is IP that belongs to mental images, a wholly owned subsidiary of Nvidia.

He described ray tracing as "another tool in the toolbox," before Huang butted in again to make it clear that Nvidia loves ray tracing (as evidenced by the acquisition of mental images late in 2007). "We love ray tracing – we love a screw driver. We just hope that's not the only tool in our bag. The goal is not the screw driver, the goal is to build an experience," said Huang.

Tamasi then went on to talk about some of the other next-generation effects he expects to see being used in the very near future – these include geometry synthesis and subdivision surfaces. The former is used for procedurally generated hair, because rendering hair is an incredibly computationally intensive task, while the latter is designed to deliver the next level of fidelity to models by describing the character mathematically. This sounds a lot like tessellation in many respects.

"[From Nvidia's perspective] the vision of the future is not disjointed – it's rasterisation and ray tracing," said Tamasi. "There will be graphics and programmability using C or C++ . . . . It's specialised graphics processing and fully generalised processing.

"All of these things are going to be required in the future of visual computing. Any one of them by themselves is absolutely not the right answer. Dedicated physics processing chips alone weren't the right answer – they were a part of the answer, but not the only answer. Ray tracing alone is a part of the answer, but not the whole answer. The answer is a synthesis of all of these things – an evolution of where we are today, picking up all the functionality," he finished.

Once Tamasi had finished, Huang piped up and reminded analysts that "Larrabee is a PowerPoint slide and every PowerPoint slide is perfect." Of course, Larrabee isn't finished yet, but calling it a PowerPoint slide is pretty direct – then again, I guess Huang was holding true to his earlier 'can of whoop ass' statement. "We've competed against a lot of PowerPoint slides over the years," he added.

"My guess is – and this is for [Intel] to prove – that an Itanium approach is not a smart approach. Leaving the industry and all of its legacy, all of the investment, all of its software that it's developed with a fundamentally new processor architecture with no recognition of rasterisation is a horrible idea. It's just a horrible idea," Huang continued.

Things then took a remarkable turn, and it was as if Jen-Hsun was giving lessons to Intel on how to build its entry into the graphics industry as he continued on with his diatribe. "You guys [at Intel] are going to have to give me an example of a good idea where billions of dollars of investment around a particular architecture was somehow irrelevant and you've got to start all over again. I don't know what you would use that processor for the first day you bring it home."

"We happen to believe that you don't have to make a tradeoff, you can have your cake and eat it too," continued Huang. "Build on the current GPU, add computational capability to it, add geometry synthesis to it, add subdivision surfaces to it – add all of these capabilities to each and every generation and leave no application behind. And don't pull an Itanium."

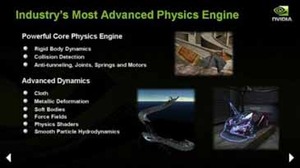

Manju Hegde, former CEO of Ageia, then came to the stage to talk about the future of PhysX following Nvidia's recent acquisition of Ageia. Huang described GPU-accelerated physics as the second killer application this year. "In my estimation, this will be the second killer app this year. The first one is video editing – we're going to speed that sucker up by 10 or 20 times – and the second will be physics."

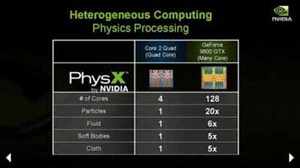

Hegde said that PhysX was the most pervasive and advanced physics API on the planet, claiming that it's featured in at least 140 titles across all platforms. He also revealed that the PhysX SDK had been completely ported over to CUDA in just one month, which enables GPU-accelerated physics. He claimed that there has been an exponential increase in developer adoption because of the installed base of more than 50 million CUDA-ready GPUs.

Whichever way you look at it, physics is going to be an increasingly important part of the future – we've seen some titles start to really use physics effectively in the past year or so, but we expect that to increase now that there is more horsepower available. Of course, we've not been the biggest fans of PhysX in the past – mainly because you needed additional hardware to enjoy the real benefits of Ageia's technology, which meant that the effects were usually limited to 'special' levels that nobody without a PhysX card played due to sub-par performance – but this is shaping up to be quite a smart acquisition by Nvidia.

There's still a question mark in my mind though, and that's the ubiquity of CUDA-based GPUs. The code can of course run on the CPU if there isn't a CUDA-ready GPU available in the system, but given how widespread multi-core CPUs are, it's impossible to say which way this battle will go. Let's forget about Intel for the moment, because I'm not sure AMD would be too happy about not having GPU-accelerated physics in the future. Could we be returning to a situation where certain features of games (or even entire games) will be vendor-specific again, like they were in the late 1990s? I really hope not.
